I work on video generation and real-time interactive systems. My current research focuses on making video generation models more intelligent and able to run in real time, helping them understand the world and interact with humans. Previously, I built data pipelines and trained foundation models at ByteDance and AI startups.

Member of Technical Staff

Real-time world models

Santa Clara, CA | Jan 2026 - Present

Research Scientist

Human-centric video generation, large-scale data pipelines, and real-time interactive generation.

San Jose, CA | Nov 2024 - Jan 2026

Machine Learning Engineer

Built data pipelines and trained video models.

San Francisco | Apr 2024 - Nov 2024

Research Engineer

Built data pipelines and trained foundation image/video models from scratch.

San Francisco | Jun 2023 - Apr 2024

Master's Degree, Computer Science

2021 - 2022

Bachelor's Degree, Computer Science and Mathematics

2017 - 2021

01 / BACKGROUND

Hey there, thanks for visiting my website. I am Tianpei Gu, currently a Member of Technical Staff at a stealth startup, based in Santa Clara, California. I like writing code and building things, and I believe everyone developing AI should be full-stack. Previously, I spent a couple of years at GenAI startups and ByteDance training foundation image/video models and shipping products.

02 / RESEARCH

My current research focuses on human-centric video generation and real-time interactive systems, or world models. I believe image and video generation models are far more than just a toy where you enter a prompt and get an image — they will have a great impact on our daily lives, just like LLMs. These models should have intelligence, not just act like render engines. For all AI products, I have my own “mom benchmark”: how can this product be used by ordinary people like my mom? To make video models more accessible, they have to be real-time and interactive. To make them viral, they have to generate content that’s not only visually appealing but also emotionally engaging. With that goal in mind, I’m mainly working on the following areas.

03 / MISC

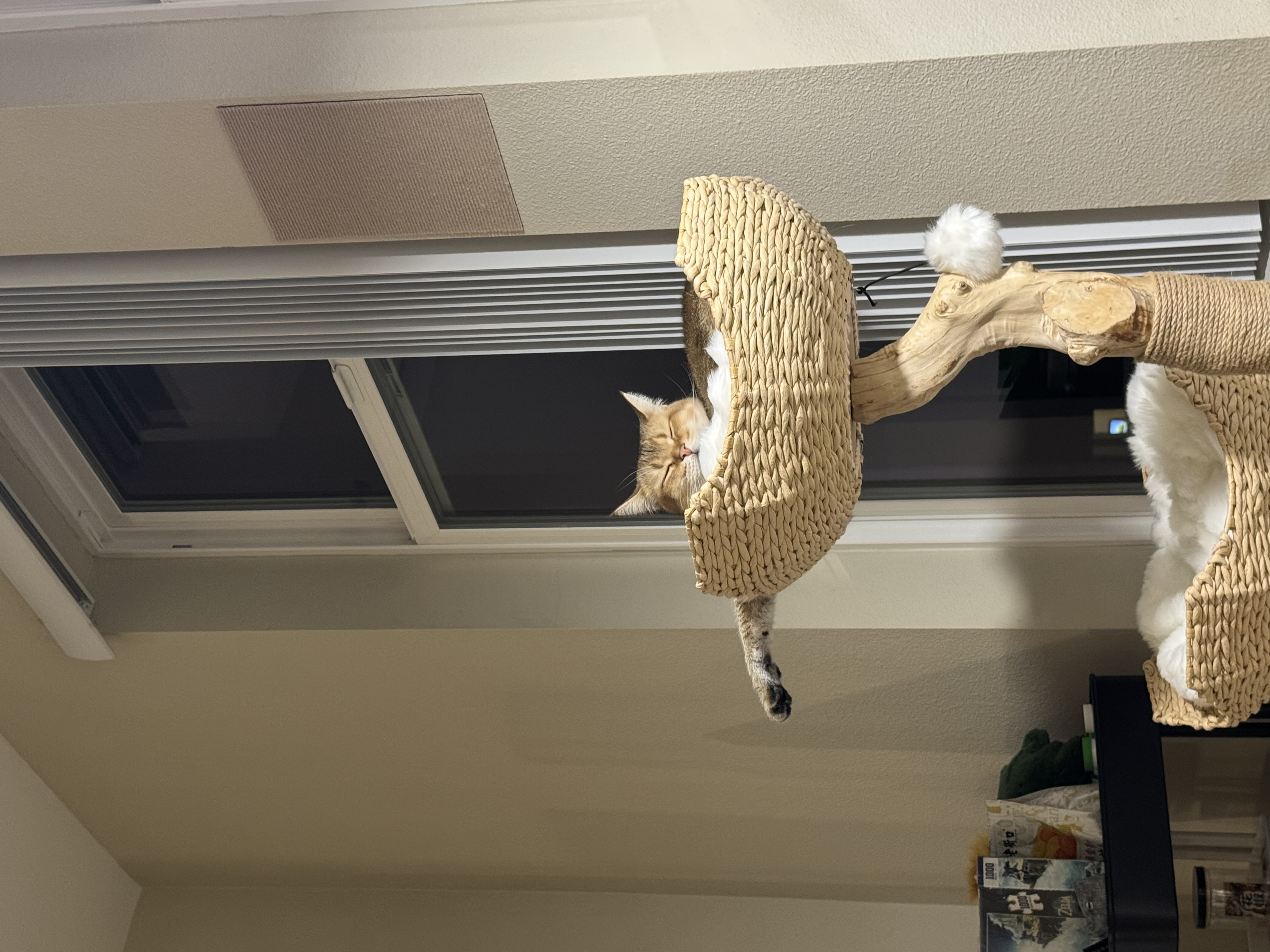

Apart from research, I enjoy exploring new technologies and creative applications of AI. I also enjoy cooking and playing Dota 2. I have a cute cat named "贝壳" (Bayker in English), and I love playing with him. Below is a gallery of Bayker. You are welcome to see more on Instagram and Xiaohongshu.

The quickest way to reach me is by messaging me on X at @gutianpei_. If you prefer a more serious medium, feel free to send me an email at gutianpei@ucla.edu. For work-related inquiries, please send me an email at tianpei.gu@bytedance.com.

I try to respond to every message I receive. Some of my friends were strangers I decided to message on a whim.